Introduction

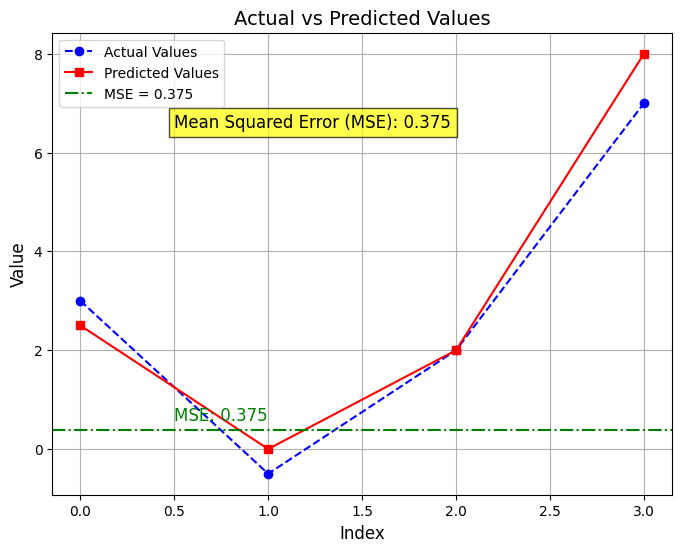

When working with machine learning models, especially in regression tasks, it’s crucial to evaluate how well your model is performing. One of the most commonly used metrics for this purpose is Mean Squared Error (MSE). In this post, we’ll break down what MSE is, why it’s important, and walk through a simple Python example to help you calculate MSE.

What is Mean Squared Error (MSE)?

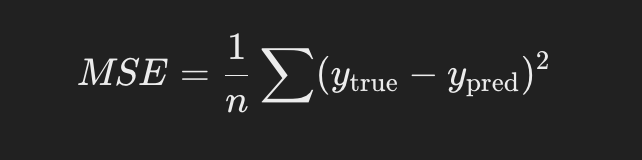

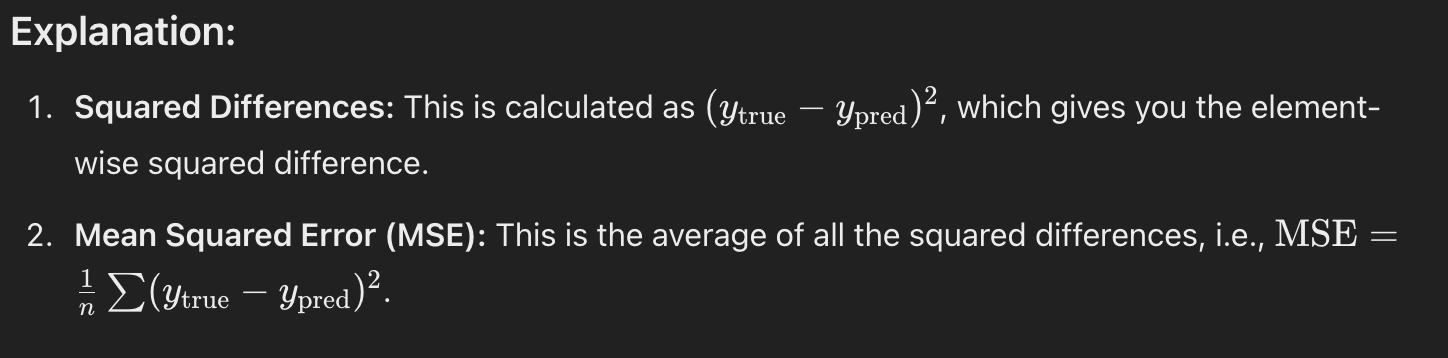

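Mean Squared Error is the average of the squared errors, where each error is the difference between an actual value and the model’s prediction for it. Essentially, it shows how far the model’s predictions are from the true values: the larger the MSE, the worse the fit. The formula for MSE is:

$$\mathrm{MSE} = \frac{1}{n} \sum_{i=1}^{n} (y_i - \hat{y}_i)^2$$

where $n$ is the number of observations, $y_i$ is the actual value, and $\hat{y}_i$ is the predicted value for observation $i$.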

Mean Squared Error (MSE): A common cost function for linear regression. It is the average squared difference between the predicted and actual values.

- Tip: Square the errors to make sure negative and positive differences don’t cancel out.
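To see why squaring matters, consider a tiny hypothetical case where one prediction is too high by 2 and another is too low by 2. The numbers below are made up purely for illustration:

```python
# Hypothetical errors (predicted - actual): one overshoot, one undershoot
errors = [2.0, -2.0]

# Plain average: the positive and negative errors cancel each other out
mean_error = sum(errors) / len(errors)

# Average of squares: every error contributes a non-negative amount
mean_squared = sum(e ** 2 for e in errors) / len(errors)

print(mean_error)    # 0.0
print(mean_squared)  # 4.0
```

The plain average suggests a perfect model, while the squared version correctly reports that both predictions were off.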

Here’s sample Python code that computes the squared difference between each predicted and actual value and then averages those squared differences to get the Mean Squared Error (MSE):
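A minimal sketch in plain Python follows. The function name `mean_squared_error` and the sample values are assumptions chosen for illustration, not taken from a particular library or dataset:

```python
def mean_squared_error(actual, predicted):
    """Return the average squared difference between two equal-length sequences."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    if len(actual) == 0:
        raise ValueError("at least one value is required")

    # Step 1: squared difference for each (actual, predicted) pair
    squared_errors = [(a - p) ** 2 for a, p in zip(actual, predicted)]

    # Step 2: average of the squared differences
    return sum(squared_errors) / len(squared_errors)


# Hypothetical example data, for illustration only
actual = [3.0, 5.0, 2.5, 7.0]
predicted = [2.5, 5.0, 4.0, 8.0]

mse = mean_squared_error(actual, predicted)
print(f"MSE: {mse}")  # MSE: 0.875
```

Here the squared errors are 0.25, 0.0, 2.25, and 1.0, which sum to 3.5; dividing by the 4 observations gives 0.875. In practice you would likely reach for a library routine such as scikit-learn’s `sklearn.metrics.mean_squared_error`, but writing it out by hand makes the two steps explicit.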
